7 Ways to Optimize Crawl Budget to Improve Your SEO

- 16 Aug 2024

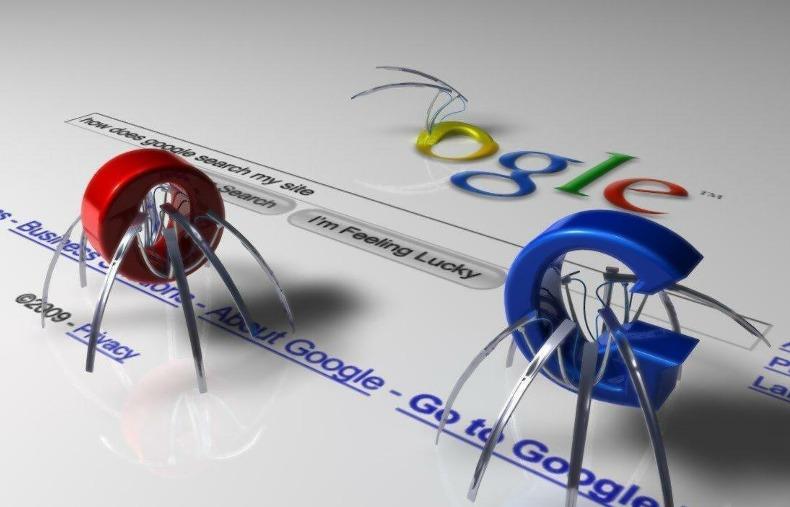

Website owners might be wondering how to get Google to crawl and index their website. The secret is simple: direct crawlers (bots) only to the important, useful pages on your website. Of course, you want Google or Bing to index every valuable URL on your site, but what about low-quality and irrelevant pages? You can keep crawlers away from those pages so they spend their limited time where it matters. Managing that limited time is what optimizing your “crawl budget” means.

What is “Crawl Budget”?

When Googlebot (or Bingbot) crawls your website, it can only access a limited number of pages. In other words, the crawl budget is the number of pages (URLs) that Google will crawl on a website within a given period. This number varies from day to day but is relatively stable overall. It is shaped by how well your site handles crawling (for example, how many errors Google runs into) and by how much demand there is for your content, which is influenced by factors such as the quality of backlinks to your website.

Google doesn't crawl every page immediately; some pages may only be revisited every week or so. On a given day it might crawl 6 pages, 5,000, or even 100,000, depending on many factors. Because of this limit, making sure crawlers spend their time on the right pages is one way to improve your SEO.

Why should you pay attention to this?

Here are some benefits of controlling what crawlers can access:

Giving Priority to Important Pages

When you control which pages Googlebot is allowed to crawl, you increase the likelihood that your important, “flagship” pages are crawled every time Googlebot visits your website.

Examples include product pages and blog posts: pages you want to rank high in search results so users can find the information quickly.

Ignoring Pages That Don't Need to Rank

Every website has pages that don't need to appear in search results. These are pages users visit briefly rather than to find in-depth information, such as login pages, shopping carts, or internal search results.

How Can You Help Googlebot Access the Right Pages?

There are many ways to guide Googlebot or Bingbot through a website. You can apply some or all of the following:

1. Robots.txt File

The first step is to decide what your robots.txt file should block, that is, the folders or files on your website that crawlers don't need to explore. When visiting a website, the first place a crawler looks is your robots.txt file, which must sit at the root of your domain (for example, www.domain.com/robots.txt). Its rules tell crawlers which URLs not to crawl, so you control which parts of your website they spend time on.

Once you've written your rules, always test them to make sure they work as intended, for example with the robots.txt report and the URL Inspection tool in Google Search Console. A single wrong rule can block your entire site or URLs you didn't mean to block.
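To sanity-check simple rules before deploying them, Python's standard-library `urllib.robotparser` can parse robots.txt text and answer whether a given user agent may fetch a URL. This is a minimal sketch: the rules and example.com URLs are hypothetical, and `robotparser` only does simple prefix matching with first-match rule order, so it does not replicate Google's wildcard and longest-match handling. Treat it as a rough check, not a substitute for Google's own tools.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example shop
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap.xml
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
for url in [
    "https://www.example.com/blog/crawl-budget",
    "https://www.example.com/cart/checkout",
    "https://www.example.com/search?q=shoes",
]:
    # can_fetch() answers: may this user agent crawl this URL?
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(url, "->", verdict)
```

Running a list of your most important URLs through a check like this is a quick way to catch a rule that accidentally blocks a flagship page.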

2. Noindex Tags

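To prevent certain URLs from being indexed by Google, add a noindex directive to the page: a `<meta name="robots" content="noindex">` tag in the page's `<head>` section. Once it's added, check it with the URL Inspection tool in Google Search Console, which replaced the older Fetch as Google feature. Keep in mind that Google can only see the tag if the page is not blocked in robots.txt.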
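Besides the meta tag, Google also honors `noindex` sent in an `X-Robots-Tag` HTTP response header. The sketch below, using only Python's standard library, checks a page's HTML and response headers for either form. It is a simplified illustration: the function and class names are hypothetical, and it ignores crawler-specific header values such as `googlebot: noindex`.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; values may be None
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            self.directives += [d.strip().lower() for d in attr.get("content", "").split(",")]

def is_noindex(html, headers=None):
    """Return True if the HTML or an X-Robots-Tag header contains 'noindex'."""
    parser = RobotsMetaParser()
    parser.feed(html)
    directives = list(parser.directives)
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            # Simplification: user-agent-prefixed values are not handled
            directives += [d.strip().lower() for d in value.split(",")]
    return "noindex" in directives

cart_page = '<html><head><meta name="robots" content="noindex, follow"></head><body>Cart</body></html>'
print(is_noindex(cart_page))
print(is_noindex("<p>Blog post</p>", {"X-Robots-Tag": "noindex"}))
```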

3. URL Parameter Rules

If your website uses a CMS (Content Management System) or an e-commerce platform, be careful: dynamically generated URLs (for example, the same product list with different sorting or filter parameters) can create duplicate pages. Googlebot can easily get stuck and waste time crawling these URLs.

Google Search Console previously offered a URL Parameters tool to help Google distinguish these dynamic URLs, but Google retired it in 2022, so today you handle them on your own site, for example with canonical tags or robots.txt rules. Whatever you use, be careful: an incorrect configuration can keep URLs you want in search from being indexed by Google.
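To see how much duplication parameters create, you can normalize a list of crawled URLs by dropping parameters that don't change the page content, then group the URLs that collapse to the same address. A minimal sketch, assuming a hypothetical `IGNORED_PARAMS` set; which parameters are safe to ignore depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters that do not change page content on this site
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}

def normalize(url):
    """Drop ignored parameters, sort the remaining ones, and remove the fragment."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

def group_duplicates(urls):
    """Map each normalized URL to the crawled variants that collapse onto it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {normalized: variants for normalized, variants in groups.items() if len(variants) > 1}

crawled = [
    "https://www.example.com/shoes?color=red",
    "https://www.example.com/shoes?sort=price&color=red",
    "https://www.example.com/shoes?color=red&utm_source=newsletter",
    "https://www.example.com/blog/crawl-budget",
]
for normalized, variants in group_duplicates(crawled).items():
    print(normalized, "<-", len(variants), "variants")
```

Groups with many variants are good candidates for a canonical tag or a crawl rule.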

4. Up-to-date XML Sitemaps

Google treats your XML sitemap as a hint rather than a rule for crawling, but it is still a useful clue. Keep your XML sitemap up to date so it reinforces which pages should be indexed: remove old URLs (and pages you don't need indexed) and add new ones.
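Sitemaps are usually generated by your CMS or an SEO plugin, but the format itself is simple. The sketch below builds a minimal sitemap with Python's `xml.etree.ElementTree` from a list of current pages; the URLs and dates are hypothetical, and in practice the page list would come from your CMS so removed URLs drop out automatically.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs.

    Only pass pages you want indexed: old or unneeded URLs are simply
    left out of the list.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as & in URLs automatically
        ET.SubElement(url, "loc").text = loc
        # date.isoformat() gives YYYY-MM-DD, a valid <lastmod> value
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

current_pages = [
    ("https://www.example.com/", date(2024, 8, 16)),
    ("https://www.example.com/blog/crawl-budget", date(2024, 8, 16)),
]
print(build_sitemap(current_pages))
```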

5. Fix Internal Links

Googlebot follows the links it finds in your website's content, so make sure your internal links don't send crawlers to missing pages (broken links). Use a crawler tool such as Screaming Frog's SEO Spider to find broken internal links so you can fix them at the source.
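Tools like Screaming Frog do this by actually fetching your pages. The core idea can be sketched offline with Python's standard library: collect every `<a href>` on each page, resolve it to an absolute URL, and flag internal targets that don't match an existing page. In this hypothetical sketch, a `pages` dictionary stands in for a real crawl; a real checker would request each target and look at its HTTP status code.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit

class LinkCollector(HTMLParser):
    """Collects href values from <a> tags."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)

def find_broken_internal_links(pages, site_host):
    """pages: dict mapping each existing page's absolute URL to its HTML."""
    broken = []
    for source, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        for href in collector.hrefs:
            # Resolve relative links and drop #fragments before comparing
            target, _fragment = urldefrag(urljoin(source, href))
            if urlsplit(target).netloc != site_host:
                continue  # external or mailto: link, out of scope here
            if target not in pages:
                broken.append((source, target))
    return broken

pages = {
    "https://www.example.com/": '<a href="/blog/">Blog</a> <a href="/products/old-item">Old</a> '
                                '<a href="https://other.example.org/">Partner</a>',
    "https://www.example.com/blog/": '<a href="/">Home</a> <a href="crawl-budget#intro">Post</a>',
    "https://www.example.com/blog/crawl-budget": '<a href="/blog/">Back to blog</a>',
}
for source, target in find_broken_internal_links(pages, "www.example.com"):
    print(f"{source} links to missing page {target}")
```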

6. Page Load Times

Googlebot has to load every page it crawls. Reducing your pages' load time lets crawlers fetch and index more pages in the same amount of time.
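As a deliberately simplified illustration (real crawlers fetch pages in parallel and adjust their rate based on how your server responds), assume a crawler spends a fixed time T on your site and fetches pages one after another with an average response time t. The number of pages N it gets through is then roughly T divided by t; the 600-second window and response times below are hypothetical numbers.

```latex
N \approx \frac{T}{t}
\qquad
\frac{600\ \text{s}}{0.8\ \text{s/page}} = 750\ \text{pages},
\qquad
\frac{600\ \text{s}}{0.4\ \text{s/page}} = 1500\ \text{pages}
```

Under these assumptions, halving the average response time doubles the number of pages fetched in the same window.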

7. Site Structure

A good site structure helps crawlers navigate your site more easily. Clear content categories, and not burying pages too deep in the site hierarchy, increase the likelihood that Googlebot will find those pages.
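Click depth, the number of links a crawler must follow from your homepage to reach a page, is one way to spot pages hidden too deep. The sketch below runs a breadth-first search over a hypothetical internal link graph; the three-click threshold is an arbitrary example rather than a Google rule, and the sketch also reports orphan pages that no internal link reaches from the homepage.

```python
from collections import deque

def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth

def deep_pages(links, home, max_depth=3):
    """Pages reachable from home, but only after more than max_depth clicks."""
    return sorted(url for url, d in click_depths(links, home).items() if d > max_depth)

def orphan_pages(links, home):
    """Known pages that cannot be reached from home by following internal links."""
    known = set(links) | {t for targets in links.values() for t in targets}
    reachable = click_depths(links, home)
    return sorted(url for url in known if url not in reachable)

# Hypothetical internal link graph: page -> pages it links to
LINKS = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/page/2/"],
    "/blog/page/2/": ["/blog/page/3/"],
    "/blog/page/3/": ["/blog/page/4/"],
    "/blog/page/4/": ["/blog/old-post/"],
    "/products/": ["/products/shoes/"],
    "/orphan-landing/": ["/"],
}
print("Too deep:", deep_pages(LINKS, "/"))
print("Orphans:", orphan_pages(LINKS, "/"))
```

Linking deep pages from a category page or the homepage shortens their path and makes them easier for Googlebot to find.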

So, what are the benefits for SEO?

Once you have implemented some or all of the methods above and tested them with the tools mentioned, you should see changes in the Crawl Stats report in Google Search Console, which also makes it easier to check your website's “health”.

If Google crawls your “flagship” pages frequently, they are more likely to rank well. New and “fresh” content gets indexed sooner, so it can start ranking faster, and less of your “crawl budget” is wasted on unimportant pages.